Uncommon Ground

Robert Porzel, University of Bremen.

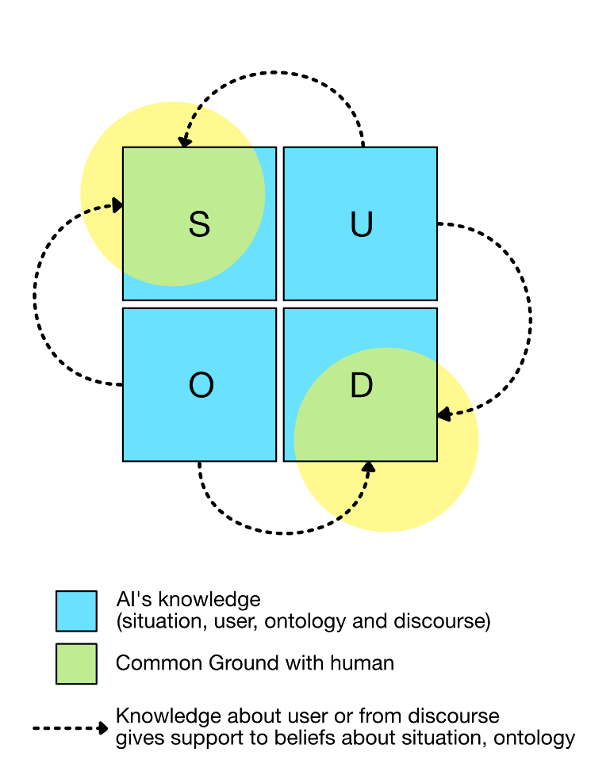

One major motivation of explainable artificial intelligence (XAI) is the desire to make the predictions of black-box machine learning (ML) models more transparent. Adadi and Berrada surveyed the XAI literature and compiled an overview of common XAI methods [Adadi and Berrada, 2018]. All of the methods they present range over the internal mechanisms of an ML model, so the resulting explanations can be called introspective. More recently, there has been an increasing need to explain AI behaviour to non-expert users of AI systems. XAI therefore needs to focus more on end-users as the recipients of explanations and on how XAI methods can be evaluated from a human-centered point of view. This sheds a different light on how to estimate the quality of an explanation and how to tailor it to a given user. This view is supported by the argument that explanations should be understood as interactive conversations. The usefulness of an explanation can depend on the context in which it is given, including the situation and the user. In domains such as everyday activities, human users interact with AI agents repeatedly over time. When the two disagree, the AI should give a helpful and succinct explanation of its behaviour, tailored to the user based on the AI’s memory of their previous interactions.

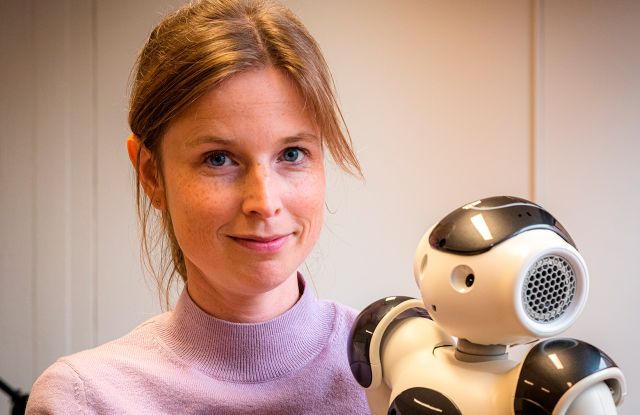

To foster a human-centered approach to XAI, individuals and their preferences should be captured in appropriate user models. These can encompass, e.g., level of education, cultural background, familiarity with and interest in technology, perception of the agent, mood, and social setting. To accommodate these factors, personalization of AI explanations might be crucial. This is particularly relevant in domains where users interact with the same artificial agent over a long period, such as smart home devices, robots in household and care domains, and other personal AI applications. If users ask for explanations because they expected different AI behaviour, there must be some disagreement between the users and the AI in their respective beliefs and knowledge. This disagreement is what we call their uncommon ground. Explanations should allow the user to realize where the uncommon ground lies. In error cases, this can empower users to correct the AI’s behaviour for future interactions; in other situations, it can enable them to see that they are missing important knowledge themselves. To facilitate this, explanations by the AI should reflect its experience from previous interactions with the same user. Personalization can improve user satisfaction by using user models built from direct or indirect user input, i.e., explicit user entry or automated systems that adapt to user behaviour. Although direct user input can have advantages, an agent that is not properly configured may be less acceptable, so personalization through indirect adaptation might be preferable. We argue that it is thus necessary to consider how to generate extrospective explanations: explanations that are given with respect not only to the system itself but that also take into account what the system knows about the user’s expectations and draw on experience from earlier interactions.

This extrospective perspective on XAI is at the heart of our work, as we focus on domains where users interact with smart software, intelligent devices, and autonomous agents or robots repeatedly over time, e.g., smart home devices and household robots. This, in a sense, entails that users have their ‘own’ personalized AI. Explanations in the context of repeated interactions have received comparatively little study, so we seek to examine this challenging problem systematically. There is a growing awareness in the XAI community that explanations ought to be considered and evaluated in the context of the user for whom they are intended. Previous works have pointed out the problem of implied-but-not-stated contrastive or counterfactual cases when humans ask for explanations. They have also noted that, in many situations, the user’s goal in asking for an explanation is to understand why the AI prediction differed from their expectation.

We claim that in the domain of AI in the home (e.g., smart home devices, household robots), extrospective explanations can provide more helpful information for end-users in a more human-centered way, enabling them to understand the reason for unexpected agent behaviour. We aim to achieve this by focusing on explanations for transparent, reasoning-based AI systems and explicitly modelling user and discourse context in the agent’s knowledge. If the AI predicts the same outcome based on the same situational knowledge and ontology in two equivalent situations, the respective explanations could still differ depending on the user asking for them: for each user, the AI would output only a part of its reasoning. Specifically, the AI should output those facts or inferences that it expects are most likely to surprise the user. This should be whatever fact or logical inference the AI has the least reason to believe that the user agrees with or is aware of. In other domains, however, there might be reasons why explanations should be purely introspective. There are many areas of life where AI is or will be used in which it will be important that explanations are consistent across different users or that they are a complete representation of the AI’s reasoning (e.g., medical or legal problems). Therefore, it will be essential to distinguish between explanations aimed at helping individual users understand local, surprising AI predictions and explanations aimed at increasing ML systems’ transparency.
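The selection rule described above can be illustrated with a minimal Python sketch. It is not the model from the paper: the `UserModel` class, its numeric agreement estimates, the update rule in `record_feedback`, and the smart-home statements are all hypothetical and chosen only for illustration. The sketch assumes that the agent's reasoning is available as a list of statements and that the agent keeps, per user, an estimate of how likely that user is to know and agree with each statement.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UserModel:
    """Hypothetical user model: for each statement, the agent's estimate
    (0.0 to 1.0) that the user is aware of it and agrees with it."""
    agreement: Dict[str, float] = field(default_factory=dict)
    default: float = 0.5  # assumed prior for statements never discussed

    def expected_agreement(self, statement: str) -> float:
        return self.agreement.get(statement, self.default)

    def record_feedback(self, statement: str, agreed: bool, rate: float = 0.5) -> None:
        """Move the estimate toward 1.0 (agreed) or 0.0 (disagreed).
        This simple update rule is an assumption, not taken from the paper."""
        target = 1.0 if agreed else 0.0
        current = self.expected_agreement(statement)
        self.agreement[statement] = current + rate * (target - current)


def extrospective_explanation(reasoning: List[str], user: UserModel, k: int = 1) -> List[str]:
    """Return the k reasoning steps the user is least expected to agree with.
    sorted() is stable, so ties keep their order in the reasoning chain."""
    return sorted(reasoning, key=user.expected_agreement)[:k]


# Invented example: the agent declined to switch on the lights.
reasoning = [
    "The user asked for the lights to be turned on",
    "It is daytime",
    "The blinds are open",
    "Energy-saving mode is enabled",
]

# Alice has confirmed everything except the energy-saving setting.
alice = UserModel()
for statement in reasoning[:3]:
    alice.record_feedback(statement, agreed=True)
alice.record_feedback("Energy-saving mode is enabled", agreed=False)

# Bob has confirmed everything except the blinds, which were never discussed.
bob = UserModel()
for statement in (reasoning[0], reasoning[1], reasoning[3]):
    bob.record_feedback(statement, agreed=True)

print(extrospective_explanation(reasoning, alice))  # ['Energy-saving mode is enabled']
print(extrospective_explanation(reasoning, bob))    # ['The blinds are open']
```

Both users receive an explanation drawn from the same reasoning chain, but each sees only the step that the agent has the least reason to believe they share. A purely introspective explanation would instead return the full chain to every user.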

[Adadi and Berrada, 2018] Amina Adadi and Mohammed Berrada. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access, 6:52138–52160, 2018.

Full paper, available on the papers page:

Laura Spillner, Nima Zargham, Mihai Pomarlan, Robert Porzel and Rainer Malaka. Finding Uncommon Ground: A Human-Centered Model for Extrospective Explanations. In Proceedings of the 2023 IJCAI workshop on Explainable Artificial Intelligence (XAI). 2023.
