Deep Understanding of Everyday Activity Commands

Robert Porzel, Rainer Malaka, et al., UHB.

Performing household activities such as cooking and cleaning has, until recently, been the exclusive province of humans. However, the development of robotic agents that can perform tasks of increasing complexity is slowly changing this state of affairs, creating new opportunities in household robotics. Typically, a robot's activity begins with the robot receiving directions or commands for that specific activity. Today, this is mostly done via programming languages or predefined user interfaces, but this is changing rapidly.

For everyday activities, instructions could be given either verbally by a human or through written texts such as recipes and procedures found in online repositories, e.g., wikiHow. From the robot's perspective, these instructions tend to be vague and imprecise, as natural language commonly relies on ambiguous, abstract, and non-verbal cues. Often, instructions omit vital semantic components such as determiners, quantities, or even the objects they refer to.

Still, asking a human to, for instance, "take the cup to the table" will typically result in a satisfactory outcome. Humans succeed despite the many uncertain variables in the environment: for example, the cups might all have been put away in a cupboard, or the path to the table could be blocked by chairs.

In comparison, artificial agents lack the same depth of symbol grounding between linguistic cues and real-world objects, as well as the capacity for insight and prospection needed to reason about instructions in relation to the real world and its changing states. Turning an underspecified text, whether issued by a user or taken from the web, into a detailed robotic action plan requires several processing steps, some based on symbolic reasoning and others on numeric simulations or data sets.

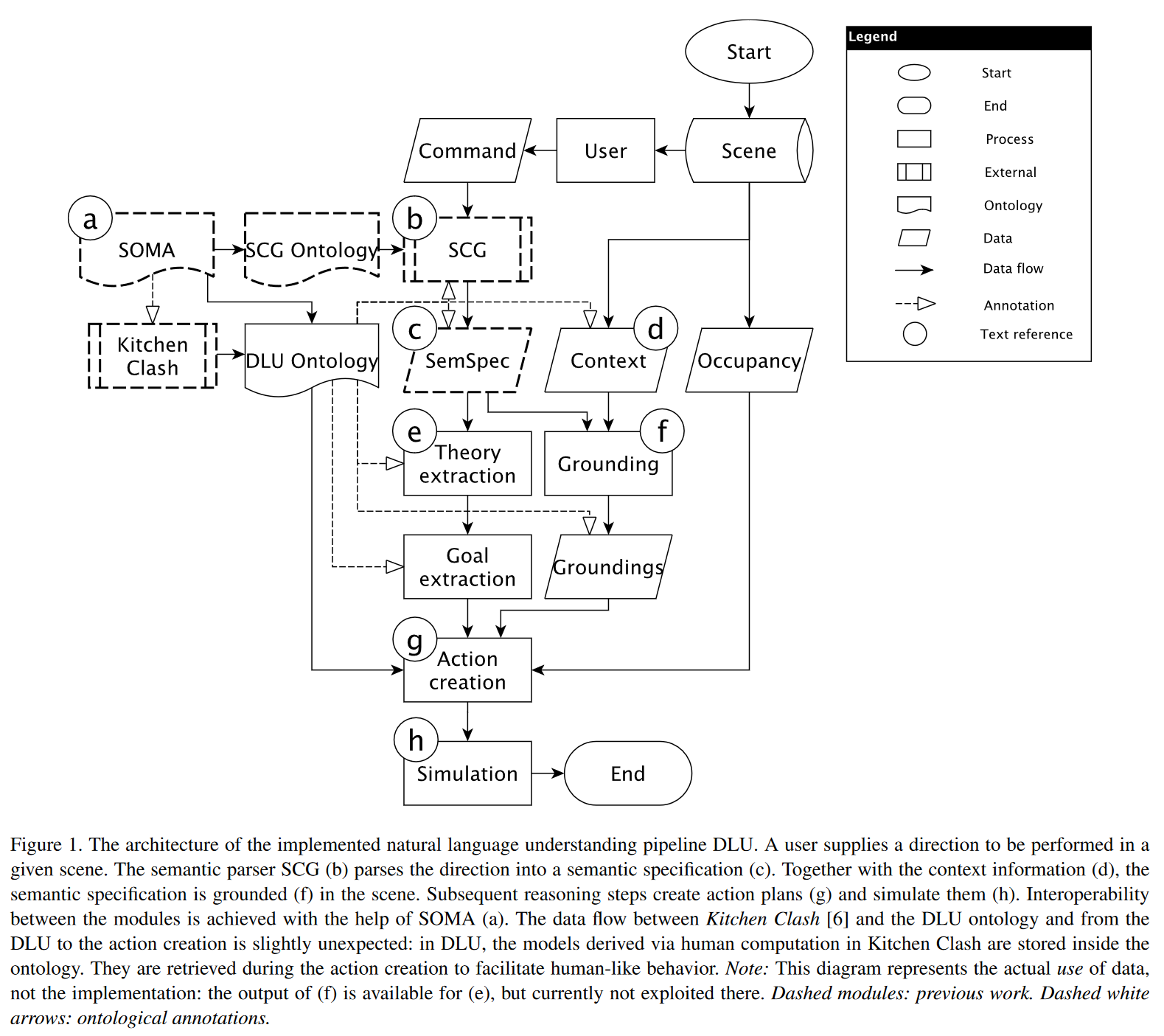

The research question addressed in the MUHAI project is therefore the following: how can ontological knowledge be used to extract and evaluate parameters from a natural language direction in order to simulate it formally? The solution proposed in this work is the Deep Language Understanding (DLU) approach. It employs the Socio-physical Model of Activities (SOMA) ontology, which serves not only to define the interfaces of the multi-component pipeline but also to connect numeric data and simulations with symbolic reasoning processes.

Directions (instructions delivered textually) and commands (instructions delivered verbally) are special kinds of instructions, as they always demand an action. Other instructions, in contrast, can also be descriptions or specifications of circumstances. For example, "take the cup to the table" requires the addressee to perform an action. Sentences such as "knives must be placed to the right of the plate" or "the onions should be brown after 20 minutes" might entail directions or commands, but they do not explicitly call for action.

Here, the focus lies on directions as an important special case of instructions. More specifically, we first look at directions in which a trajector must be moved by an agent along a trajectory, i.e., directions that can be described using a Source-Path-Goal schema. To this end, we integrated a natural language understanding system capable of simulating natural language directions, using a formal ontology as a common interface between its individual components. This work showed that the architecture can successfully simulate an everyday activity task.
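To make the Source-Path-Goal idea concrete, the following is a minimal, hypothetical sketch in Python. The `SourcePathGoal` frame, the `parse_direction` function, the regular-expression pattern, and the small set of motion verbs are all illustrative assumptions; they are not DLU's actual pipeline or SOMA's vocabulary. The sketch only shows how a direction such as "take the cup to the table" maps onto a trajector and a goal while leaving roles the text never states (here the source and the path) empty, to be filled later from context, simulation, or ontological knowledge.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical frame for a Source-Path-Goal direction (not DLU's real schema).
@dataclass
class SourcePathGoal:
    action: str
    trajector: str                 # the object to be moved
    source: Optional[str] = None   # often omitted in the text; must be inferred
    path: Optional[str] = None     # never extracted by this toy pattern
    goal: Optional[str] = None

    def missing_roles(self) -> list[str]:
        """Return the spatial roles the text left unspecified."""
        return [r for r in ("source", "path", "goal") if getattr(self, r) is None]

# Assumed, illustrative set of verbs that demand a transport action.
MOTION_VERBS = {"take", "bring", "carry", "move", "put"}

# Toy pattern: "<verb> [the] <object> [from [the] <source>] to [the] <goal>"
PATTERN = re.compile(
    r"^(?P<verb>\w+) (?:the )?(?P<obj>[\w ]+?)"
    r"(?: from (?:the )?(?P<src>[\w ]+?))?"
    r" to (?:the )?(?P<goal>[\w ]+)$"
)

def parse_direction(text: str) -> Optional[SourcePathGoal]:
    """Map a simple imperative direction onto a Source-Path-Goal frame.

    Returns None for sentences that do not start with a known motion verb,
    e.g. descriptions that do not explicitly call for action.
    """
    m = PATTERN.match(text.strip().lower().rstrip("."))
    if m is None or m["verb"] not in MOTION_VERBS:
        return None
    return SourcePathGoal(
        action=m["verb"], trajector=m["obj"], source=m["src"], goal=m["goal"]
    )

if __name__ == "__main__":
    frame = parse_direction("Take the cup to the table.")
    print(frame)
    print("unspecified:", frame.missing_roles())
```

A real system would replace the regular expression with a proper language analysis and would fill the empty roles by reasoning over the environment (for instance, looking up where cups are usually stored); the sketch only illustrates why an underspecified direction yields an incomplete frame.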


Online Demonstration:

DLU runs on a live instance at https://litmus.informatik.uni-bremen.de/dlu/

Video Demonstration:

The video is available at https://osf.io/t2mnw/

Open Data:

The source code, software, and hardware information used to build and run DLU are available at https://osf.io/nbxsp/

The ontologies and their metrics are available at https://osf.io/e7uck/

Credits

Intro photo created by kjpargeter - www.freepik.com
