Can Robots Cook? Culinary challenges for advancing artificial intelligence

Alexane Jouglar, University of Namur.

Source: https://dictionary.cambridge.org/dictionary/english/cook

Cooking is something we do every day to produce (delicious) dishes that are ready to eat. Many machines exist to help humans cook: slow cookers, multi-cookers, pressure cookers… These machines are helpful, but they still need direct human intervention. Currently, it is not possible to give a recipe to a machine, place the machine in the kitchen, and ask it to cook the dish. The reason is that recipes rely on a lot of background and implicit knowledge that humans gradually acquire by practicing and interacting with the world, and that cannot be recovered from reading the recipe alone. Let’s take an example.

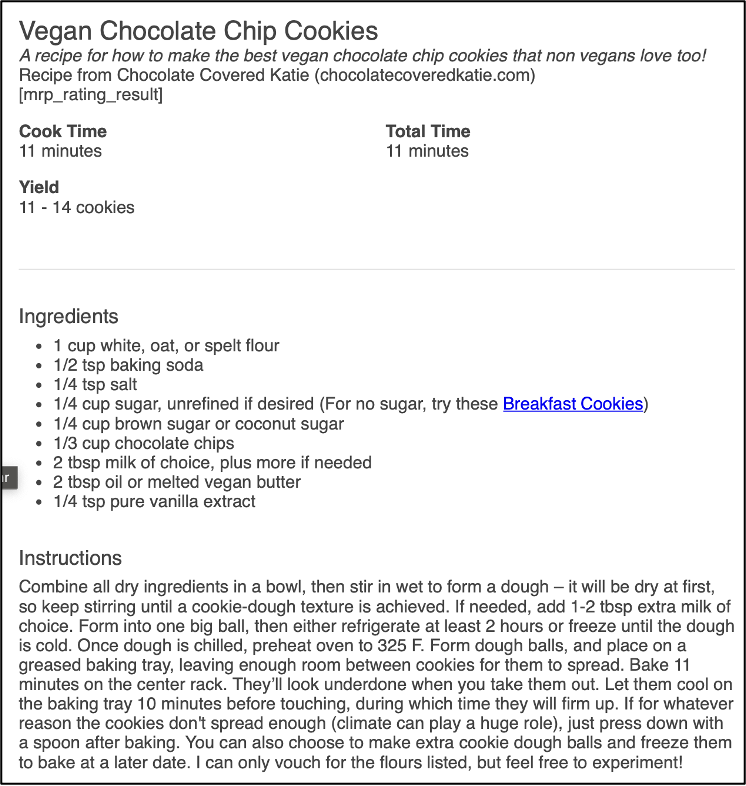

Source: https://chocolatecoveredkatie.com/vegan-chocolate-chip-cookies-recipe/

As we can see in the figure above, the recipe instructions start with “Combine all dry ingredients in a bowl”. As humans, we know what dry ingredients are. We also know that “all” refers only to the ingredients listed above the instructions, not to every dry ingredient in the kitchen. For a machine, this is a lot harder: it has to be equipped with that background knowledge explicitly. That's precisely the challenge posed by a new benchmark introduced in a recent paper published at LREC-COLING 2024:

Nevens, J., De Haes, R., Ringe, R., Pomarlan, M., Porzel, R., Beuls, K., & Van Eecke, P. (Accepted/In press). A Benchmark for Recipe Understanding in Artificial Agents. In The 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation
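To make the “dry ingredients” example concrete, here is a minimal Python sketch of the background knowledge a machine would need. It is only an illustration and not the approach used in the paper: the lookup table `DRY_INGREDIENTS`, the function `resolve_all_dry`, and the ingredient list are all hypothetical.

```python
# Hypothetical background knowledge: which ingredients count as "dry".
# This table is an illustrative assumption, not part of the benchmark.
DRY_INGREDIENTS = {"flour", "sugar", "baking soda", "salt", "chocolate chips"}


def resolve_all_dry(ingredient_list):
    """Resolve "all dry ingredients" against the recipe's own ingredient list.

    "all" is scoped to the listed ingredients, and "dry" is decided by the
    (hypothetical) lookup table above.
    """
    return [name for name in ingredient_list if name in DRY_INGREDIENTS]


# Hypothetical ingredient list, loosely modelled on a cookie recipe.
recipe_ingredients = [
    "flour", "baking soda", "salt", "sugar",
    "chocolate chips", "milk", "oil", "vanilla extract",
]

dry = resolve_all_dry(recipe_ingredients)
print(dry)
```

Even in this toy version, the instruction cannot be resolved from the sentence alone: the machine needs both the ingredient list and a notion of what “dry” means.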

This benchmark is the result of a collaboration between the Vrije Universiteit Brussel (VUB), the University of Bremen (UBremen), and the University of Namur (UNamur). It aims to evaluate whether artificial agents can understand and perform everyday cooking activities. It includes several components:

- Corpus of Recipes: A collection of 30 recipes of varying complexity.

- Procedural Semantic Representation Language: This language helps formalize 38 cooking actions in a way that machines can understand. It breaks down recipes into precise steps and provides a framework for representing cooking processes.

- Kitchen Simulators: Both qualitative and quantitative simulators recreate kitchen environments where agents can practice cooking. They model the physical aspects of cooking, such as ingredient interactions and cooking times.

- Evaluation Procedure: A standardized method for evaluating agent performance.

The main task of the benchmark is to translate natural language recipes into a series of cooking actions that can be executed in the simulated kitchen to produce the desired dish. This translation process requires the agent to reason over multiple factors, including the recipe text, the state of the simulated kitchen, common-sense knowledge, and domain-specific cooking knowledge.
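The idea of turning a recipe sentence into executable actions can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the benchmark's representation language or simulator: the `Kitchen` and `Action` classes, the action names `fetch`, `transfer` and `mix`, and the hand-written plan are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Kitchen:
    """Toy symbolic kitchen state (hypothetical, for illustration only)."""
    pantry: set = field(default_factory=set)
    containers: dict = field(default_factory=dict)  # container -> contents


@dataclass(frozen=True)
class Action:
    name: str    # hypothetical action names: "fetch", "transfer", "mix"
    args: tuple


def execute(kitchen, action):
    """Apply one action to the kitchen state, failing if it is not possible."""
    if action.name == "fetch":
        (container,) = action.args
        kitchen.containers.setdefault(container, [])
    elif action.name == "transfer":
        ingredient, container = action.args
        if ingredient not in kitchen.pantry:
            raise ValueError(f"{ingredient} is not available")
        if container not in kitchen.containers:
            raise ValueError(f"{container} has not been fetched")
        kitchen.pantry.remove(ingredient)
        kitchen.containers[container].append(ingredient)
    elif action.name == "mix":
        (container,) = action.args
        contents = sorted(kitchen.containers[container])
        kitchen.containers[container] = ["mixture(" + ", ".join(contents) + ")"]
    else:
        raise ValueError(f"unknown action: {action.name}")


# Hand-written plan for "Combine all dry ingredients in a bowl",
# assuming flour and sugar are the dry ingredients of the recipe.
plan = [
    Action("fetch", ("bowl",)),
    Action("transfer", ("flour", "bowl")),
    Action("transfer", ("sugar", "bowl")),
    Action("mix", ("bowl",)),
]

kitchen = Kitchen(pantry={"flour", "sugar", "milk"})
for step in plan:
    execute(kitchen, step)

print(kitchen.containers)
```

Note that the plan contains a `fetch` step that the recipe text never mentions. Filling in such implicit steps, and checking each action against the current kitchen state, is the kind of situated reasoning the task calls for.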

Success in this benchmark requires a combination of natural language processing and situated reasoning. By mastering the art of cooking, artificial agents can become more versatile and helpful in various real-world scenarios.

Source: Canva

The introduction of this benchmark represents a significant step forward in the field of artificial intelligence. It challenges researchers to develop systems that can understand natural language and apply that understanding to practical tasks like cooking. As these systems improve, they have the potential to revolutionize various aspects of our lives, from personal assistance in the kitchen to industrial food production.

Discover the full paper in our dedicated section: Jens Nevens, Robin de Haes, Rachel Ringe, Mihai Pomarlan, Robert Porzel, Katrien Beuls and Paul van Eecke. A Benchmark for Recipe Understanding in Artificial Agents. In Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti and Nianwen Xue (eds.). Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024). 2024, 22–42
